NIH encourages schools to teach rigor: Part II

The Emails

Preamble and caveats

These grantees are not “bad apples.” They represent a large population of researchers with the same incentives who would do the same thing in the same situation. The question is not how to get rid of these individuals. The question is how to fund metascience in a way that doesn’t fall to overwhelming incentives the way many other interventions have before.

Some of the grantees have done exceptional work. I will also highlight C4R’s anonymous “confession box,” where researchers can post poor practices they've witnessed. Despite the small number of followers C4R had at the time, the box contains some important stories about p-hacking by handing off an analysis and about refusing to correct errors, even among coauthors.

Note: In the interest of full disclosure, I applied to be a curriculum developer with the project two years ago. The head of C4R, Konrad Kording, didn't give me the job, saying that I was “not flexible enough” (true). Although I’m sure this experience makes me somewhat biased, as one of the few funded projects, C4R would have been interesting to write about regardless.

On using full names

I’ve chosen to publish full names because: (1) this is a publicly funded project with non-anonymous participants; (2) it’s difficult for readers to know that any of this is true without the subjects being able to google their own names and respond; and (3) I have offered to withhold any names if there is a compelling reason, such as future employment for a non-public figure, and I’ve given the right to reply here or by a response that I will link to.

Corrections

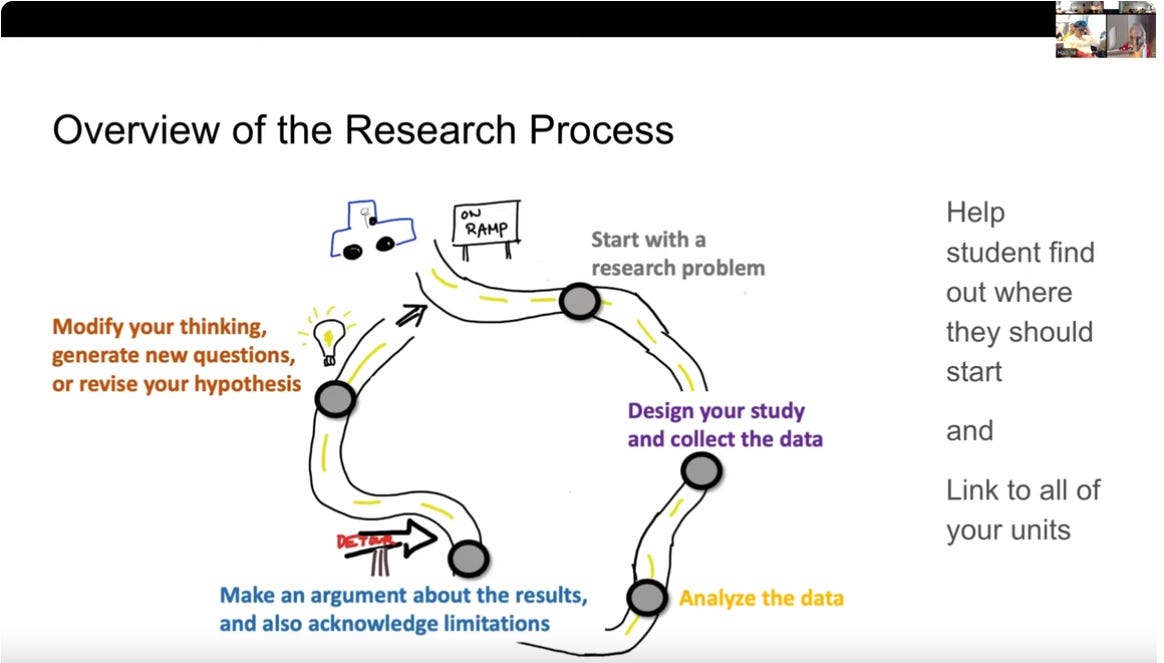

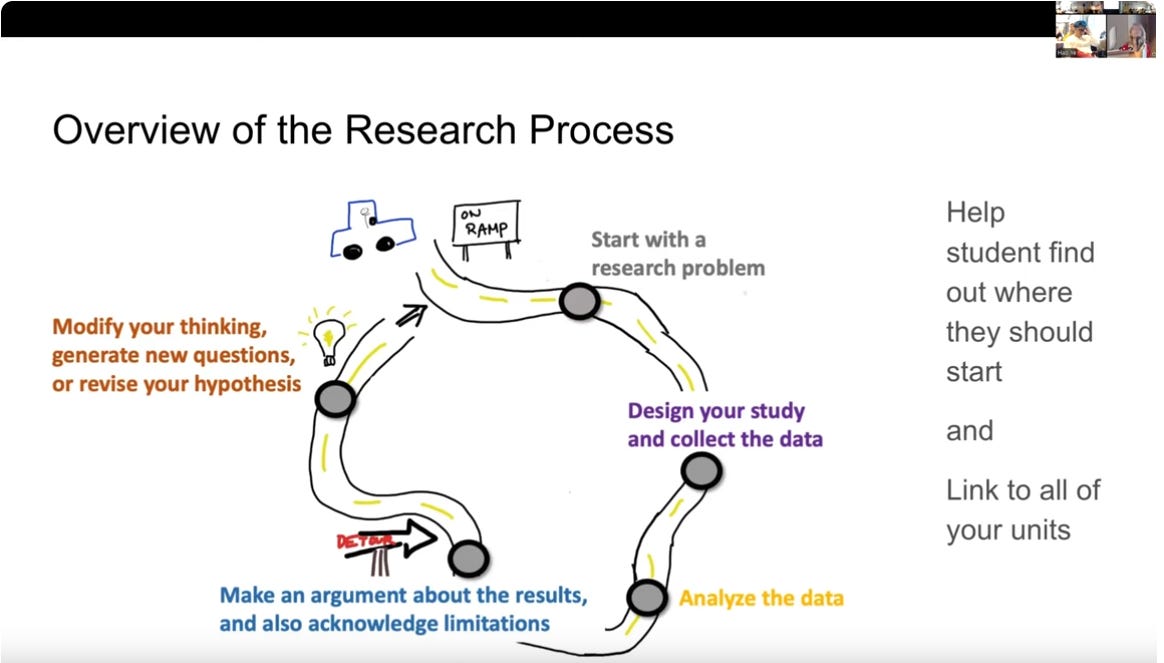

I have two corrections to these emails: Simine Vazire said that it was dangerous to ignore the loss of early career researchers with reformist views, not that it’s dangerous to your career to have reformist views. Although I think both are implied, this is a misquote. Additionally, the phrasing that “another” presentation recommends playing around with variables is confusing. The image of the research lifecycle is taken from the same presentation.

Summary of the emails

The email chains follow in chronological order. They are repetitious. This excerpt is all that’s necessary to understand my original objection:

I think nothing encapsulates this better than this slide from the annual meeting:

Contrasted with a slide from Dr. Crawford, originally from Munafò et al. (2017):

This second slide is mainstream metascience that has appeared in the literature many times. The C4R presentation, by contrast, suggests not coming up with a hypothesis in advance, analyzing the data exclusively after it has been collected, and “revis[ing] your hypothesis.”

Another presentation also endorses HARKing (“most published research is not truly confirmatory (and that's ok)”). Another says the material can be used in clinical trials but teaches “playing around with variables” and randomizing in R, apparently post hoc. Citation [is] generally lacking in these presentations and this R code is from “somewhere on the web.”

At one point, one of the speakers discouraged preregistration, another hallmark recommendation from metascience and pre-existing practice like RCTs.

Some of these projects don't seem to draw from any literature and represent at least a softening of the lessons from a very serious problem.

The Email Chains

C4R annual conference feedback

Nov 14, 2024, 2:21 PM

To: C4R, Shai Silberberg

Hello, I have been told this is the email for feedback on the annual conference. You may remember me from some community collaboration/curriculum developer meetings we had once. As an introduction, I've had experience with teaching metascience to researchers and I am familiar with the dynamics of introducing people to these topics.

I also just got back from a metascience conference where I witnessed the same thing I think is happening at C4R among some of the elite in the metascience community. That is, metascientists recommending the opposite of rigor as we understand it.

I'm not the only one who has noticed this. Daniel Lakens spent his keynote discussing the poor work that is being done in metascience. Metascientists don't always follow their own rules. In some cases they may succumb to the usual pressures to publish and protect their publication records. So there are signs metascience is facing a crisis. It could be that people aren't going to trust the field in the future. Dr. Koroshetz covered this happening to stroke research in his talk. Metascience might also begin to align itself with existing practice and take skepticism out of the equation.

I think nothing encapsulates this better than this slide from the annual meeting:

Contrasted with a slide from Dr. Crawford, originally from Munafò et al. (2017):

This second slide is mainstream metascience that has appeared in the literature many times. The C4R presentation, by contrast, suggests not coming up with a hypothesis in advance, analyzing the data exclusively after it has been collected, and "revis[ing] your hypothesis."

Another presentation also endorses HARKing ("most published research is not truly confirmatory (and that's ok)"). Another says the material can be used in clinical trials but teaches "playing around with variables" and randomizing in R, apparently post hoc. Citation [is] generally lacking in these presentations and this R code is from "somewhere on the web."

At one point, one of the speakers discouraged preregistration, another hallmark recommendation from metascience and pre-existing practice like RCTs.

Some of these projects don't seem to draw from any literature and represent at least a softening of the lessons from a very serious problem. I'm happy to go into those another time.

These materials are going to educate researchers who already have incentives to go back to the old ways. Some of them will be doing important work. Some of them might be running RCTs and an NIH project says they can play around with their variables. Should these materials have a disclaimer that they shouldn't be used in RCTs? I don't think that's a good outcome.

Also remember there are people now who are eager to criticize metascience or do their next story on how, ironically, the preregistration study wasn't preregistered. I think to the extent that it is in the spirit of skepticism and debate, criticism is good. But the backlash to metascience isn't going to be a few upstarts. It will have the weight of much of mainstream science behind it.

So I'm asking you not to let this project go forward in its current form. I joined the community and I am expecting materials to critique individually but I also think you all need to do this yourselves. I know you use the same definition of p-hacking that I do and you've read the papers on Dr. Silberberg's slides like False Positive Psychology so I think we are all equipped to do it.

Thank you for your time. I'm looking forward to future engagement and good luck.

Alex

Dec 2, 2024, 10:36 AM

From: C4R

Hi Alex,

Thanks for engaging with our annual conference presentation. It seems like engaging with our materials once they are prepared and ready for discussion would be mutually beneficial. Please look forward to invitations to focus group and provide feedback on our work!

Cheers,

Zac Parker

Dec 3, 2024, 10:39 AM

To: C4R, Shai Silberberg

Zac,

As I said, I'm happy to keep doing this but you should be doing it yourselves. Walter Koroshetz said the government can't decide what best practice is, that you all need to do it. If you are telling me that I have to do it, we have real problems. I didn't get a grant to do this. I don't work at the NIH or UPenn.

What I've said is that the lessons do not match the literature. I will except Boston University's presentation. Anyone qualified to teach researchers about rigor should have read the starred papers in the list below. Just like in any field, you have to acknowledge existing literature.

If you all want to focus on criticisms of these papers, those exist too. As far as I can tell, the lessons are not derived from either metascience or criticism of metascience. If I had to guess why they were written this way, I would say they're designed to be least confrontational. Maybe they come from lore passed around in research settings like trying a lot of analyses: "the natural selection of bad science."

Another entry in the genre of researchers coming to the rescue and having poor practices themselves has come out in The Atlantic. I don't think you all understand how serious this is, or how offensive it is to people working, many without funding, on this topic. I suspect this project will be added to the timeline of the reproducibility crisis.

All the best and good luck.

Alex

Dec 6, 2024, 1:01 PM

To: Walter Koroshetz

Dr. Koroshetz,

Your talk at the Community for Rigor kickoff is very inspiring to me and I recommend it often. I think the rigor community is in your debt. The same for Dr. Silberberg and Dr. Crawford, and I've told them this.

Unfortunately, I am writing you to report something that isn't working well. I have been emailing with Dr. Crawford, Dr. Silberberg, and Community for Rigor for a month trying to get a satisfactory answer to this feedback.

My first contact with community for rigor was almost two years ago when I applied to be a curriculum developer with Dr. Kording. I also donated some training material I did at University of Chicago as a community contribution. I am not a PhD but I am one of the few people who has trained researchers on reproducibility, metascience, and the replication crisis. I spend all of my waking hours on this topic.

Konrad said my materials were very good and we should at least find a way to collaborate. I didn't get the job and, as far as I know, my materials haven't been used.

My understanding of this field is that the number of people willing and able to produce training material informed by the reproducibility crisis is very very small. The reason why C4R exists in the first place is that these things are not taught in schools or in labs. So we have a serious chicken-and-egg situation. If you get PhDs to do this work, they will, in general, do a bad job. You are left with people like me who don't have the same incentives but nobody wants to listen to. In other words, this is not an easy problem to solve. We already knew that.

When the C4R annual meeting came out, I watched it. It was worse than expected. It was as if the same people who had been p-hacking and HARKing were now in charge, and they're going to teach others.

I sent an explanation with timestamps and screenshots to Dr. Crawford in November. I have written this up many times so I will reproduce what I wrote her:

"So I was surprised and not surprised to see rigor champion recipients endorsing HARKing ("most published research is not truly confirmatory (and that's ok)"), "playing around with variables" and randomizing in R, dissuading people from preregistering, and generally sounding like they haven't done the homework.

I am reaching out to you because I really want to know how to solve this. You can be excused for thinking this email is all sour grapes. I don't think it is. I think we have to figure out how to fund metascience and not have it turn into its opposite.

What I would suggest is working against citation bias in the materials. For instance, this depiction of the research lifecycle doesn't include any of the safeguards like preregistration, power analysis, publishing null results and many others advocated by the original Rigor Champions paper, other projects, and NINDS itself. Instead it says to do analysis exclusively after data has been collected, and then "revise your hypothesis."

Maybe there should be outside speakers like Lakens or materials that address why these are difficult topics in academia. Rigor Champions is after all funding something that should be the domain of schools. I don't know the exact formula. I don't think this is it. I think the obvious response is that NINDS funded people with an extreme conflict of interest, as most academics have."

I also emphasized that people in the metascience community and the press have started to believe that metascientists aren't always rigorous themselves. Daniel Lakens addressed this in his keynote (p. 48-53) at a recent metascience conference. Since I wrote my first email, more articles have come out in the "Who will watch the watchmen?" genre. Community for Rigor fits into this phenomenon:

Fabricated data in research about honesty. You can't make this stuff up. Or, can you? (July, 2023)

The Distortions of Joan Donovan Is a world-famous misinformation expert spreading misinformation (June, 2024)

This Study Was Hailed as a Win for Science Reform. Now It’s Being Retracted (September, 2024)

The Business-School Scandal That Just Keeps Getting Bigger The rot runs deeper than almost anyone has guessed. (November, 2024)

I subsequently emailed C4R and Dr. Silberberg, and got what I would consider a "brush off." They implied that I should be policing this project through future focus groups. I am not funded and I don't work for UPenn or NIH. And I am not you, Dr. Koroshetz. If the obligation to critique this is being passed to people like me, then I don't see how funding metascience will work. I really would like funding metascience to work so I am asking you to do something about this.

Thank you,

Alex

Dec 14, 2024, 9:57 PM

From: Walter Koroshetz

Hello Alex,

Thank you for reaching out to me and for your kind words. As you know, NINDS remains deeply committed to developing a culture of scientific rigor. I have spoken with my staff, and they are keenly aware of your concerns. I can assure you that we will take your feedback into consideration as we move forward to develop a more rigor minded community.

Walter J. Koroshetz, M.D.

Director, National Institute of Neurological Disorders and Stroke

Dec 16, 2024, 11:29 AM

To: Walter Koroshetz

Thank you. I appreciate that very much. Dr. Crawford and Dr. Silberberg have been great in general. Looking forward to the rest of the project and hope you all have good holidays.

Feb 3, 2025, 11:35 AM

To: C4R, Shai Silberberg

Zac,

Do you have a timeline for releasing the materials or the focus groups? The estimate that's on the website is from September and it says, "Members of the C4R mailing list can expect to receive invitations to help beta test our materials over the coming months either in person at in Philadelphia or via an online focus group" and that materials would be made available on the website after the conference in October.

I have a general comment that it's difficult to see that anything is going on at Community for Rigor. I'm sure something is but there are no slack messages, blog posts, newsletters, updates to the repository or the web site. Almost all of the recent social media posts have been about Neuromatch, which I don't think is funded under this project.

The reason I'm asking for a timeline is that I would like to know if there are stages to the public release and the focus groups and what stage we're in. What stage are the materials that were presented at Society for Neuroscience in?

Dr. Kording presented materials at AIMOS in November. They are online here:

https://hms-wason-246-v2.vercel.app

but not linked from the website. As far as I can tell there are no authors listed so I assume this was done at CENTER.

My other question is about the state of the feedback I provided on the annual conference. Was it passed on to the authors? Were the authors able to respond?

As with any scientific output, particularly output that is supposed to inform future output like this material, the ability to read and respond is important! It seems like that would be the easiest thing for CENTER to implement.

To quote from the project description, "the average scientist fails to consistently follow the relevant principles." Adoption requires "broad community effort." It requires "buy-in from all stakeholders." The rollout "necessitates a tight feedback loop with the community." This is a "grass-roots, community-driven movement" with "bidirectional collaboration with the community."

As I said, feedback on the annual conference was solicited. I and maybe other community members have written it and it has been received. What I was told is the materials aren't ready. These are people who have spent their lives in education and in research. How much more time do they need? Are they aware that their materials aren't ready for feedback?

It's easy for me to believe that Community for Rigor has joined "the average scientist" as you say and failed to follow relevant principles, but I am willing to be convinced. Please let me know if there is anything I am missing.

Best,

Alex

Feb 5, 2025, 1:10 PM

From: C4R

Hello Alex,

All the information you're requesting is part of our internal planning process. If you would like to stay up to date on our progress, please make sure to sign up to our mailing list.

Thank you,

Carolina Garcia

Community Engagement and Communications Lead

Feb 6, 2025, 7:46 AM

To: C4R, Shai Silberberg

Carolina,

Thank you for the reply. Can you confirm that I'm on the mailing list you're talking about? Is there an archive of past mailings? I have never gotten a newsletter so I assume none have gone out. I could be wrong.

Is there anything else I've said in these emails that C4R disputes? Are there outputs I have missed? If this is going to take more than a few days to compile, can you let me know?

Also, is there anything you can say about what happened to my feedback? From what you and Zac have said, it sounds like you're confirming that feedback is part of this project but I need to submit it at a later date.

I know being in the vanguard is difficult. If these were easy issues we would have solved them a long time ago!

Best,

Alex

Feb 12, 2025, 11:32 AM

To: me, Shai Silberberg

Hello Alex,

You are, indeed, on our mailing list and should have received our last communication regarding our upcoming focus group sessions and new social media channels. Please check your spam folder if you don't see them in your inbox.

Our focus groups are a great way to engage with our work and provide feedback. If you didn't receive that email, here's the link to register: https://forms.monday.com/forms/997c2ebc9606e2a35b9e6481577666f6?r=use1

Best,

Carolina

Feb 13, 2025, 8:20 AM

To: C4R, Shai Silberberg

Carolina,

I did receive the invitation, thank you. I believe this is related to Rick Born's presentation? I didn't have any comments for him from the annual conference but I have since read his paper "Stop Fooling Yourself," which I think is very good and I expect the module will be too.

I still haven't heard what happened to my feedback. I don't think C4R is embracing the community. This community is not only underfunded, it is also people who have stuck their necks out. Many of them don't get to work in academia anymore. As Simine Vazire said recently, reform can be "dangerous" for your career. Imagine how sensitive the community will be to you all sitting in silence as grant winners advocate p-hacking.

There is a paper that just came out that found 30% of researchers "endorsed p-hacking," among other things relevant to this project. This shouldn't be the rate for METER recipients and obviously we should engage them in friendly debate. This process is in its third month, so I will do it myself.

I'm also reminded of the "Grad Student Who Never Said No" blog post that bragged about p-hacking. See Brian Wansink's wikipedia page. (In that case, he was doing poor research himself, which I am not claiming about the presenters.) Wouldn't it have been nice to catch Dr. Wansink before he did all that research? I think that's the spirit of this project.

Thank you for the reply. Again, I hope things get better. Dr. Born's paper is good to see.

Alex

Feb 17, 2025, 1:13 PM

To: Mary Harrington (Smith College)

Dr. Harrington,

I'm responding to the request for comments from the Community for Rigor on the annual conference. In early November, I contacted NIH and Community for Rigor CENTER with this feedback. I was not able to find out if it was being registered or tossed out, so I am sending it to you directly.

The purpose of C4R is to develop training material on rigorous research. Although rigor is not precisely defined, NIH/NINDS list some specifics on their website and they have cited authors like Munafo, Ioannidis, Simmons, Simonsohn, and Nelson in presentations and wrote a paper about this project. My objections come from these sources, although I think it's not always necessary because many of the principles of rigor are reflected in federal law such as those around conducting RCTs.

Please let me know if you have other citations or other arguments for your work. I hope you will take this in the spirit of debate.

I also think you should consider that this project started because academics are doing something wrong. It will be very plausible to your audience in the metascience community that you are doing something wrong. An example of this is Brian Wansink who is well-known in the reform community. He posted an endorsement of p-hacking online, defended it continuously despite considering a lot of feedback from his audience. That was 2017, but many experts (30%) still endorse p-hacking.

What you've depicted here is the usual research lifecycle practiced in labs. It is optimized to increase researcher degrees of freedom, publication, and funding.

Compare this to the research lifecycle presented by C4R program officer Devon Crawford about the project:

This is originally from Marcus Munafo who is also a coauthor on the original study that started C4R.

The differences should be obvious to people who will be reading your material. Researchers should change their minds when they encounter new evidence, but you say "revise your hypothesis." In other words, yours is a depiction of HARKing. NINDS says that part of experimental design is "Clear identification of planned exploratory (hypothesis-generating) and / or confirmatory (hypothesis-testing) components of the study."

You put data analysis and "make an argument about the results" after data collection. Not, as most researchers in rigor would endorse, preregistration, pre-analysis plans, or as NINDS says, "Prospective specification of statistical methods to be used in analysis and interpretation of results, including considerations for multiplicity."

You say to start with a "research problem." Canonized throughout the history of science, RCTs, and rigor literature is the idea that you start with a hypothesis.

What you are proposing to teach is more or less the opposite of the intentions of this grant and the rigor literature and you don't provide citations yourself. This is without mentioning the glaring omissions such as any boundaries on design, analysis, or reporting.

I sent a reading list to CENTER around two months ago:

I don't have anything from CENTER saying that training should cite literature, or whether or not they agree that these are papers they would endorse other than the fact that some of them have been featured in presentations from NIH such as Shai Silberberg's at the same conference you presented at. Specifically, Simmons (2011) and Gelman and Loken (2013) address these issues.

I think you should write your materials with these papers in mind, or cite the literature you are drawing from. If you are drawing from laboratory experience, then I'm suggesting you are recreating the research process this project is trying to work against. It should be obvious to the people working at C4R and I have urged them to tell you.

I hope you will see this message as productive and beneficial. I'm not out to denounce you. I don't think the rigor community has something to gain from denouncing individuals, particularly ones who are making an honest mistake. It is very relevant, though, whether or not projects like this will work and feedback is a big part of that, as Dr. Silberberg and others have suggested.

Best,

Alex

Feb 17, 2025, 1:13 PM

To: Benedict Kolber (UT Dallas)

Dr. Kolber,

NIH/NINDS requested feedback on the Community for Rigor annual conference. I provided it to them in November, but I have not been able to find out if it was passed on to the authors so I am sending it directly.

I disagree with your framing of confirmatory/exploratory research as a spectrum because it complicates the important issue, which is disclosure. As NINDS puts it, it is important to have "Clear identification of exploratory (hypothesis-generating) and confirmatory (hypothesis-testing) components of key supporting experiments."

You go further than that, though. In your slides, you say, "most published research is not truly confirmatory (and that's ok)." That is not ok and not endorsed by the rigor literature or NINDS. Research the government thinks is necessary to protect human health like RCTs is strictly against this. Can you say that if RCTs looked confirmatory but were really exploratory, that's ok?

As I've told your colleagues, all this would take to fix is requiring citations. Are there papers that will support the idea that most research, although it looks confirmatory is actually exploratory and it's fine?

I'm including a reading list, which I also sent to C4R months ago. One of the top papers in the rigor literature "False Positive Psychology" was cited by NINDS at the same conference where you spoke. Do you disagree with this paper or any of the others? If so, I would urge you to cite other papers or write one of your own.

I hope you appreciate this in the spirit of scientific debate. It could also be an honest mistake. I expect there will be critiques in the future from the rigor community that you can avoid.

Best,

Alex

Feb 17, 2025, 1:13 PM

To: Mark Kramer, Uri Eden (Boston University)

Dr. Kramer and Dr. Eden,

NINDS requested feedback at the Community for Rigor annual conference, which I watched on youtube. I've sent a few similar emails to NIH and CENTER so you may have already received this in some form.

I'm trying to be rigorous and include good with the bad. You're one of the goods! I thought your presentation was great. I'd love to see this as an RCR course or "scaled up" somehow. Also, the distribution here suggests you could do a study like "Medicine's Uncomfortable Relationship With Math." Very encouraging output for this project.

Best,

Alex

Feb 17, 2025, 1:13 PM

To: Richard Born (Harvard University)

Dr. Born,

I am emailing participants in the Community for Rigor who presented at the annual conference. For completeness, I'm including positive comments.

I didn't have any feedback for you from your presentation but I've since read "Stop Fooling Yourself!" which I think is great and I passed this on to CENTER and NIH. Looking forward to your module.

Best,

Alex

Feb 18, 2025, 9:22 AM

From: Mark Kramer (Boston University)

Hi Alex,

Thanks for the positive feedback. I’m happy you enjoyed the presentation. In case useful, you can find our draft material here (which includes the sample size calculation among other topics).

https://mark-kramer.github.io/METER-Units/

We’re always interested in continuing to expand this education work!

Mark

--------

Mark A. Kramer

Prof. Math & Stats, BU

Feb 18, 2025, 12:45 PM

To: me

Dear Alex,

Thank you for taking the time to share your thoughts on the C4R annual conference and our materials. We appreciate your engagement and the perspectives you've contributed.

We have shared your feedback with the relevant teams, and as with all input we receive, it will be considered alongside other factors as we continue refining our work. Our team is working collaboratively to make decisions that best align with the goals of the project.

At this time, we don’t have further updates to provide, and we kindly ask that you refer to our public communications for any future developments. Additionally, to keep communication streamlined, we ask that you direct any further inquiries to this email rather than reaching out to individual team members.

We appreciate your understanding and your interest in this work.

Warmly,

C4R Team

Feb 19, 2025, 2:46 PM

To: Shai Silberberg

Dr. Silberberg,

I wanted to forward this to you for the public record and I'm hoping it's interesting.

I'm a big fan of this project despite how it might sound. If all C4R accomplishes is the "confession box" on twitter and Boston University's module, I would be happy. I don't actually care that much how metascience gets funded as long as it's moving forward.

The problem is the things that are backward. 1. the statements from the annual conference that were offensive to existing work on rigor and 2. the stonewalling.

Future projects should engage with the community on the substance of their feedback, make sure feedback is officially and if possible publicly recorded, and give anyone who may be criticized the right to respond. None of that was done here.

I could have gotten all of this by posting comments on youtube instead of sending emails to NIH and C4R. Plus future projects might learn from this one.

Instead, I've been emailing everyone under the sun all the way up to members of congress to try to correct something that should have been instantaneous. Someone could have raised their hand at the conference. Someone could have sent an email to the authors. They could have issued a correction or a follow up or anything other than what was done, which is just to avoid the issue as long as possible. It's not comforting for future funders and supporters of the field.

Thank you for your work. I do appreciate it. I have endorsed the rest of C4R and NINDS many times and I will certainly do so again.

Alex

Feb 20, 2025, 4:26 PM

To: me

Hi Alex,

Thank you for sharing this with me.

Best wishes,

Shai

Mar 13, 2025, 12:14 PM

To: C4R, Shai Silberberg

Dear C4R,

I was delighted to see you released the first module. As I said, I was expecting it to be good and it was.

I was not happy to see that some of the videos of the annual conference have been taken down. What was the reason for this?

I was not able to sign up for the feedback sessions because the form had been closed before the Tuesday session. I don't think this is a major issue but it would be nice to know when the deadlines are.

I reviewed the slides for the 3 hour confirmation bias module. My feedback is below. I would like some kind of confirmation that the feedback has been received and I would like to know what will happen to it. Is it going to be forwarded to the authors? Do the authors have the right to reply?

It may be hard to believe, but I have been very positive about this project in general and I've sent many emails to that effect. The promised tight feedback loop with the community is a major exception. You can't just do what you're accusing others of doing and only accept information you like. I'm going to keep pushing on this because it undermines everything you're doing and there are plenty of examples of metascientific projects being co-opted, some of which I've already sent.

Confirmation Bias Module Feedback

I like the idea of seeing what other participants submitted. It gives the reader some incentive to participate and it may make people feel they're not alone in their choices. IRBs may not like this because it can be viewed as human experimentation. It also has the chance of inhibiting responses, and the usual dangers of unmoderated user-generated content apply.

Deduce the number rule activity:

Trying something that doesn't fit the pattern is not the same as trying something that doesn't fit the pattern you were thinking of. The distribution could easily be the result of users truly trying to falsify their hypothesis but not finding a falsifying pattern until the end.

"Ask an LLM" might seem a little dated if LLM enthusiasm starts to be associated with the mid-2020s. Certainly saying this without any caveats about hallucinations will seem dated. It is also introducing a controversial topic without much need for it.

The slides talk about H1 and H2 but the activity addresses refinements to H1 on parsimony and causal inference.

People outside neuroscience won't understand some of the terminology. Are there plans to translate this for other fields?

"Pick the most biased choice" activity:

Agreement with the majority is not quantified and it should be used with caution. Most people p-hack. Most people don't mask or randomize according to the paper that inspired this module.

Slide 48 is very unclear. By "The study favors H1," do you mean the researchers favor H1? The study data favoring H1 also makes "H1 likely to be supported" so this is confusing.

"The myriad choices researchers have when designing experiments..." (Slide 49):

The design stage is not necessarily or predominantly where there is a lot of freedom. The slides don't mention post hoc choices. A later slide (50) backs this up as most of these are post hoc and slide 66 mentions blinded analysis.

"Researcher degrees of freedom" was coined earlier than 2014, in 2011 with False-Positive Psychology: "The culprit is a construct we refer to as researcher degrees of freedom." Gelman and Loken is cited instead. Gelman and Loken is an excellent contribution but it focuses on choices made before a p-value is seen. Many choices can be made after.

The unit doesn't mention the combinatorial nature of researcher degrees of freedom. If you have three choices at one stage and three at the next, that's 9 possible combinations, and of course it gets larger quickly from there.
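To make the counting concrete, here is a minimal sketch of how analysis choices multiply. The stage names and options are hypothetical, chosen only for illustration; they are not taken from the module.

```python
from itertools import product
from math import prod

# Hypothetical analysis stages and options, for illustration only.
stages = {
    "outlier_rule": ["none", "2.5 SD", "3 SD"],
    "covariates": ["none", "age", "age + sex"],
}


def count_pipelines(stages):
    """Number of distinct analysis pipelines: the product of options per stage."""
    return prod(len(options) for options in stages.values())


def enumerate_pipelines(stages):
    """List every combination of one option per stage."""
    names = list(stages)
    return [dict(zip(names, combo)) for combo in product(*stages.values())]


pipelines = enumerate_pipelines(stages)
print(count_pipelines(stages), len(pipelines))  # 9 9

# With three options at every stage, the count grows as 3 ** k.
for k in range(1, 6):
    print(k, 3 ** k)
```

Each added stage multiplies the total rather than adding to it, which is why a handful of individually defensible choices can produce dozens or hundreds of possible analyses.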

Pre-registering a hypothesis is good but pre-analysis plans are just as relevant. Registered reports are arguably easier on the investigator (a publication is guaranteed after the design is accepted by a journal).

"We minimize confirmation bias..." (Slide 52):

One of the most relevant proposals on confirmation bias that goes back to Kahneman is adversarial collaboration. Later incarnations include prereview and red teaming. You hint at it earlier in the module. "Peer review" will usually conjure journal peer review.

What is the math behind the "Explore the impact of bias" activity? All of the probability of significance is clustered around a small range controlled by the bias. For n = 250 and bias 0.15, the probability of significance at all levels is 1 for true effect size greater than 11, and zero for effects less than 8. These are also not plausible values for Cohen's d.
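For comparison, here is a rough sketch of how the probability of significance is conventionally computed for a two-group comparison. It is not the activity's actual formula, which I don't know. It assumes a two-sided z-test, that n = 250 is the size of each group, and that "bias" is a constant shift added to the observed effect in Cohen's d units; all three are my assumptions.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def prob_significant(d, n_per_group, bias=0.0, alpha=0.05):
    """Approximate probability that a two-sided two-sample z-test is significant.

    d            true standardized effect (Cohen's d)
    n_per_group  sample size in each of the two groups (assumed equal)
    bias         ASSUMPTION: constant shift added to the observed effect,
                 expressed in Cohen's d units
    """
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    # Expected z statistic: standardized difference divided by its standard error.
    shift = (d + bias) * sqrt(n_per_group / 2)
    # Probability of landing in either rejection tail.
    return _STD_NORMAL.cdf(shift - z_crit) + _STD_NORMAL.cdf(-shift - z_crit)


# Probability of significance without and with the assumed bias of 0.15.
for d in (0.0, 0.2, 0.5, 0.8):
    print(d, round(prob_significant(d, 250), 3),
          round(prob_significant(d, 250, bias=0.15), 3))
```

Under these assumptions, the probability of significance moves from the nominal 5% to nearly 100% well before d reaches 1, on a scale where 0.8 is conventionally called a large effect. A transition between 8 and 11 suggests the activity's x-axis is on some other scale.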

Parkinson's drug activity:

Overall this example is very good and gets into practical concerns.

It seems to imply you're going to get a null result if you mask. The bright side that you may get a trustworthy positive result could be highlighted.

The unblinding section doesn't address unblinding even when the treatment doesn't have any effect: sugar pills that taste sweet, treatment injection that is a different color from the control etc. (Slide 77)

In "How might the mask fail?" it's not clear if the advice is coming from other users.

Well done on exploratory vs confirmatory disclosure (slides 96 and 97). I would argue it's still not ok for most research to be exploratory since most research does not disclose that it's exploratory.

Overall, the module is well-cited and the citations back up the slides. Maybe you don't want to self-cite too much but connecting this to Dr. Born's "Stop Fooling Yourself" paper at the end might be helpful because papers are more likely to be cited in future work than presentations. I would recommend showing a how-to-cite for the presentation as well.

Best,

Alex

Mar 13, 2025, 3:57 PM

To: me, Shai Silberberg

Hi Alex,

Thank you so much for the kind words and feedback!

While I understand your frustration with feedback not being incorporated as quickly as you’d expect, we are doing our best to hear from as many scientists and researchers as we can to provide a universally valuable product that is fun, engaging, and helpful. That does mean as we’re releasing the first units, it’s going to take longer than we hope to gather, analyze, and incorporate feedback since it is the first time we’re doing it.

Your feedback will be grouped in with all the other feedback we gather about the unit over the next few months. We’re actively looking for people who are willing to use our units in their classrooms or labs so we can evaluate not just the scientific accuracy but also the ease of use and enjoyment. The UX researcher on our team will collect as much information as we can, and come up with a set of recommendations for how we can improve the unit accordingly. We may reach out to the METER team that helped us complete the unit to ask for ways to address feedback if we aren’t sure how to do it ourselves, but ultimately it’s the decision of the team at UPenn to make the final call.

I’ve personally read through all your feedback and agree with a lot of the points you made. When it comes to translating to other fields, that’s something we are actively looking into (e.g. How different do the examples need to be before it’s legible to an audience in a different field? How many versions of a unit should we make to cater to different fields? What is the next field we should consider updating the unit for?). Personally, I’d like us to have a few more units completed for our neuroscience audience to enjoy before we update for additional fields.

Again, thank you for the kind words and thorough feedback. It is really encouraging that we already have so many passionate members.

Please be on the lookout for upcoming units we’ll be releasing in the next couple of months on controls and randomization, followed by a unit on writing reproducible code.

Warmly,

Thomas and C4R Team

Mar 14, 2025, 10:14 AM

To: C4R, Shai Silberberg

That's lovely, Thomas. I do appreciate the reply and C4R's work on this.

I don't think there's a response in there on the YouTube videos but I will rely on my priors. I'm sure you know nothing disappears on the internet. Removing things often makes it worse.

On other fields, I agree that neuroscience is the NINDS priority. I hope that translation happens even if it's to generic terms everyone can understand. That said, I'm sure other fields can get something from it now.

Looking forward to further refinement! Please send everyone my best.

Alex

May 27, 2025, 4:10 PM

To: C4R, Shai Silberberg, Walter Koroshetz, Devon Crawford, Konrad Kording

C4R,

As always, hope you are doing well. On June 9th, I will publish this email thread with the blog posts attached at redteamofscience.com. There are three issues I'd like to ask you about. Feel free to say no comment as you like. I will either quote you in the post, or publish a long response if you'd prefer. If anyone has individual concerns or would like me to withhold their name, please respond even if you don't want to CC the whole group.

1. I would like to publish the video of Dr. Kolber's presentation and Dr. Harrington's presentation from the C4R annual conference. I would still like to know why the original videos were removed. If there's a compelling reason not to republish them then I won't (that they are being criticized is obviously not a compelling reason).

I believe this is protected by fair use given that they are short clips for criticism and educational purposes.

2. You asked me not to contact the grantees directly. I will need to contact Dr. Kolber and Dr. Harrington to notify them about the post. Same question for this. Why did you ask me not to contact them?

3. As you know, one of my objections to this project is the solicitation of feedback followed by lots of behavior that seems to prevent feedback. In order to participate in the feedback sessions, community members need to sign a waiver that has an ominous connotation:

"Participation in our research initiatives may pose some risks to you and your privacy. In extreme cases, when your responses are collected in a group session, others may be able to cause you hardship such as embarrassment or loss of employment on the basis of your responses. This risk is about the same as any time you speak in a professional capacity."

Throughout this process, it seemed that the grantees (METER recipients) have been protected from critiques of their work. It's ironic that there should be any barriers for community members at all, let alone this particular risk. I noted that an IRB was likely involved in the post. Do you have a response to this?

Any feedback or objections are welcome. Please keep in mind I'd like to publish any responses with the blog post and I have seen debates like this get lost in the weeds to the point where there are about 3 people on earth willing to wade through it. I don't think this serves anyone's purposes other than to further kick the can down the road. I would like everything to be as clear as possible.

Best,

Alex

May 28, 2025, 10:26 AM

Hi Alex,

Thank you again for being an active part of our community! We welcome constructive feedback and have mechanisms in place to address it, but we cannot guarantee that all users’ ideas will be reflected in our materials. That would unfortunately be impossible to implement.

Regarding the consent form you refused to accept, it is standard practice for testing of this kind; we have conducted several focus groups with hundreds of attendees who have agreed to it without reservations. That said, anyone is free to refuse it and therefore decline their participation.

If you are intending to republish the videos, you’re correct, you’ll have to contact the UTD and Smith teams directly. I believe their university profiles are public. We obtained their written consent to use their likeness before posting anything with them in it, so I’d encourage you to do the same. The same applies for use of our team’s likeness, image, names, etc.

Warmly,

Thomas

Thomas McDonald (he/him)

Director of Technical and Research Operations

Community for Rigor (C4R) - Kording Lab

May 29, 2025, 2:23 PM

To: C4R, Shai Silberberg, Walter Koroshetz, Devon Crawford, Konrad Kording

C4R,

It may be that hundreds agreed, although they are asked to verbally agree while being recorded in front of their peers. Informed consent should be free of coercion. You admit speaking in a professional capacity comes with risks but you ask for consent while everyone is speaking in a professional capacity.

Additionally, I did not say in the attached blog posts that I didn't agree to the consent form. You are revealing that information and it is obviously considered private. (Others revealing their consent in front of their feedback session on Zoom also has this problem.)

Do C4R, or any of the parties on this email have a comment on the above two paragraphs? Is this the process in general, or only what I experienced? The point I was making in the post is that it is ominous. It comes off as a threat. Not only can it be dangerous to speak in public, interest in scientific reform is regarded as risky for your job. In other words, the point I am now making is that your response is reinforcing that view.

On the topic of use of names, images, and likenesses, please be clear about what you are saying and whether or not you speak for everyone CCed.

I offered to withhold anyone's name with the understanding that this doesn't really apply to public figures, and that, in general I am free to comment on matters of public interest using people's names.

There are also laws against using names for "exploitative purposes." See https://www.dmlp.org/legal-guide/using-name-or-likeness-another

Are you claiming these blog posts are for an exploitative purpose? Or are you asking me to withhold everyone's name? What you wrote sounds like I need the same kind of agreement you had with conference participants. I don't know what was in that agreement but my relationship with you all is not the same as your relationship with the participants.

On likenesses and images, I am not publishing anyone's likeness who is CCed here, nor any images that they own as far as I am aware. The only thing that could be interpreted this way is an image from one of Dr. Crawford's talks, which is from a previous paper and done in her capacity as a federal employee. The video clips have only a tiny view of the audience in the corner that can also be seen in the remaining video of the conference still on YouTube.

If Dr. Kolber and Dr. Harrington revoked their permission based on this agreement and that's why the videos were taken down, please say so.

Just to emphasize, I don't think I need to ask for permission to publish any of this. The reason is that C4R is a publicly-funded scientific project and commentary on it is in the public interest. The point about fair use applies even if there's a proprietary component to the project (copyright). I assume this does not apply.

I will notify Dr. Kolber and Dr. Harrington and request their participation.

Alex

[No further emails have been received as of June 9th.]